While specific pricing details may vary, full access to advanced features usually requires a paid subscription. The platform currently charges credits even for generating short preview animations, which makes it costly for users to test different versions of their content. A common user request is for low-resolution, watermarked previews that would allow more affordable testing and iteration before committing to high-resolution final versions. Without this option, users risk spending credits on previews that ultimately don’t meet their expectations. The AI generates visuals frame by frame, but it may struggle to maintain a holistic understanding of the entire scene.

The model is overseen by Mustafa Suleyman, the recently hired Google DeepMind co-founder. OpenAI will use OverflowAPI to improve model performance and provide attribution to the Stack Overflow community within ChatGPT. Stack Overflow will use OpenAI models to develop OverflowAI and to maximize model performance. The company is working closely with TSMC (Taiwan Semiconductor Manufacturing Co.) to design and produce these chips, although the timeline for launch is uncertain. With this move, the company aims to keep up with rivals like Microsoft and Meta, which have made significant investments in generative AI.

The model is also available on Hugging Face for anyone who wants to download the weights and dive deeper into its architecture. Genmo’s dedication to open access means that creators, researchers, and developers can use this model freely for both personal and commercial purposes, driving forward the democratization of AI tools. In some edge cases with extreme motion, minor warping and distortions can also occur. Mochi 1 is also optimized for photorealistic styles, so it does not perform well with animated content. We also anticipate that the community will fine-tune the model to suit various aesthetic preferences.

Databricks has released DBRX, a family of open-source large language models setting a new standard for performance and efficiency. The series includes DBRX Base and DBRX Instruct, a fine-tuned version designed for few-turn interactions. Developed by Databricks’ Mosaic AI team and trained using NVIDIA DGX Cloud, these models leverage an optimized mixture-of-experts (MoE) architecture based on the MegaBlocks open-source project. This architecture allows DBRX to achieve up to twice the compute efficiency of other leading LLMs. Google DeepMind has developed an AI system called Search-Augmented Factuality Evaluator (SAFE) that can evaluate the accuracy of information generated by large language models more effectively than human fact-checkers.
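The mixture-of-experts idea mentioned above for DBRX can be illustrated with a minimal sketch. This is not the DBRX or MegaBlocks implementation: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical, and a real router is learned and operates on token vectors. The sketch only shows why such a layer can save compute: each input is sent to a few selected experts rather than to all of them.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k):
    """Run only the k selected experts and blend their outputs."""
    out = 0.0
    for idx, weight in route_top_k(router_scores, k):
        out += weight * experts[idx](x)
    return out


# Hypothetical toy experts: each one simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical router scores for a single input (normally produced by a learned layer).
router_scores = [0.1, 2.0, 0.3, 1.5]

selected = route_top_k(router_scores, k=2)
result = moe_forward(1.0, experts, router_scores, k=2)
print(selected, result)
```

With `k=2`, only two of the four toy experts are evaluated for this input; the other two are skipped entirely, which is where the efficiency gain comes from in this simplified picture.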

OpenAI recently introduced a game-changing feature in ChatGPT that lets you analyze, visualize, and interact with your data without the need for complex formulas or coding. OpenAI has formed content and product partnerships with Vox Media and The Atlantic. OpenAI will license content from these media powerhouses for inclusion in the chatbot’s responses. AMD CEO Lisa Su introduced new AI processors at Computex, including the MI325X accelerator, set to be available in Q4 2024. These announcements show how eager Nvidia is to retain its position as a leader in the AI hardware market. In addition to pushing the acceleration of AI chips, Nvidia is developing new tools to shape AI’s implementation in multiple sectors.

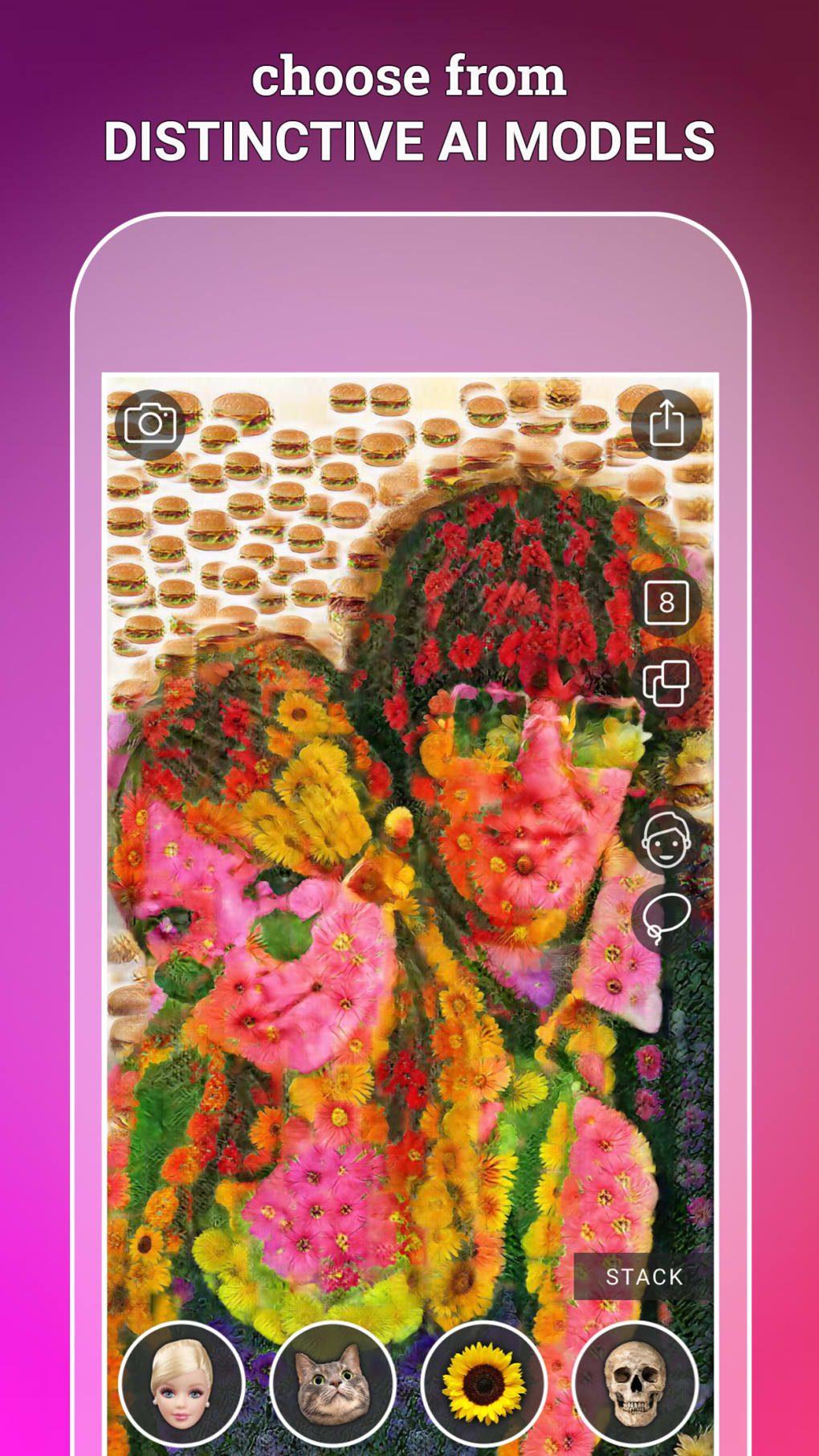

In this AI YOUniverse video, the host introduces Alpha.genmo.ai, a text-to-video AI tool that enables users to create stunning videos for free. The platform allows for the creation and sharing of interactive, immersive generative art, including videos and animations. To use Alpha.genmo.ai, sign in with a Google or Discord account and explore examples of other users’ work. Users can create videos by entering prompts, adjusting settings, and changing the artistic style. Genmo is making waves in the video generation world with its latest release, Mochi 1, an open-source video generation model with impressive abilities to create smooth, realistic motion and adhere to textual prompts. If you’ve been following the advancements in AI for video, Mochi 1 brings an exciting new level of quality to open-source models, making it a powerful alternative to the industry’s closed systems.

Whether you’re seeking education, entertainment, or an interactive experience, Read Aloud For Me is your gateway to the future of family-friendly digital interaction. Our multilingual stories celebrate the rich heritage of children from all corners of the globe, ensuring every child sees themselves in the magic of storytelling. This concept could change EA’s business model, creating a more interactive and dynamic relationship with their player base while possibly unlocking new revenue streams and extending the lifespan of games. However, it’s just a concept video; only time will tell what the future of video game creation will truly look like.
